Introduction:

Data analysis at scale requires faster and larger systems for data warehousing and processing, which definitely incurs high costs and management overhead. Google BigQuery is a cloud-based big data analytics service offered by Google Cloud Platform for processing very large read-only data sets without any configurations overhead. BigQuery was designed for analyzing data in the order of billions of rows, using an SQL-like syntax. BigQuery enables you to set your data warehouse as quickly as possible with the latency of seconds. You do not need to set up, organize and manage your data warehouse. It is integrated with many other tools in the Google Cloud Platform as well which enables you to create robust data pipelines very quickly and efficiently.

What it means to be serverless?

While there is no official community definition of being serverless, we will begin explaining the concept by stating some of the leading principles of what it means to be serverless.

Principles of serverless-ness:

-

Invisible infrastructure :

You can run your web server, some heavy-number-crunching code, or a large query and all the administrative overhead like the OS updates, networking, etc is abstracted away from you.

-

Automatic scaling

Serverless computing increases/decreases the compute capacity as needed, making sure that the resources are scaled efficiently in case of heavy workloads while still keeping check that they are not under-utilized in case of low workloads. They scale up and down very tightly with your actual usage.

-

Pay per use

Serverless computing only charges you for the time you are actually doing the processing.

Underlying Architecture of BigQuery

Storing and querying massive petabyte scale datasets can be a time consuming and expensive task without the right hardware and infrastructure. Many of you might have experienced the pain of querying heavy datasets on your local machines. BigQuery enables you to perform near real time analysis of petabyte scale datasets with the latency of milliseconds. BigQuery fits best in OLAP (analytical) workloads. It is not used for OLTP (transactional) workloads. BigQuery can be accessed via WebUI, command line tool or by making a call to BigQuery REST API using different client libraries.

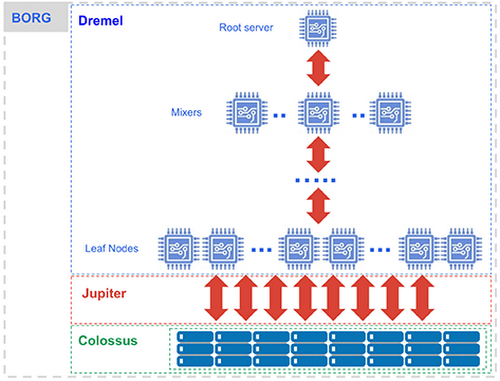

BigQuery requests are powered by the Dremel query engine (paper on Dremel published in 2010), which orchestrates your query by breaking it down into pieces and reassembling the results. BigQuery relies on Colossus, Google’s latest generation distributed file system. Google Jupiter network allows us to efficiently and quickly distribute large workloads.

Under the hood, BigQuery uses the columnar storage format. Every column is stored in a separate file and that file is encrypted and replicated. This makes read performance really fast. BigQuery is by design append-only preferred. That means that you better let duplicate rows from the same entity in the table and write your queries to always read the most recent row. You have only 100 updates per table per day. BigQuery has tight integrations with other Google cloud services like Dataproc (connector), Dataflow, Cloud Storage, Cloud Datalab, Cloud Dataprep etc.

Separation of Compute and Storage:

Separation of compute and state is the secret that makes BigQuery so good at concurrency. The separation of compute and state refers to the ability to maintain intermediate state between processing stages in a high-performance component separate from either the compute cluster or storage. There are several benefits to separating compute from state,

-

Less state in compute means compute becomes more ephemeral and scalable.

-

Keeping less state in processing nodes makes workloads more resilient to individual node issues.

-

Processing is more streamlined; processing stages don’t conflict within the same compute nodes resulting in resource contention and bottlenecks.

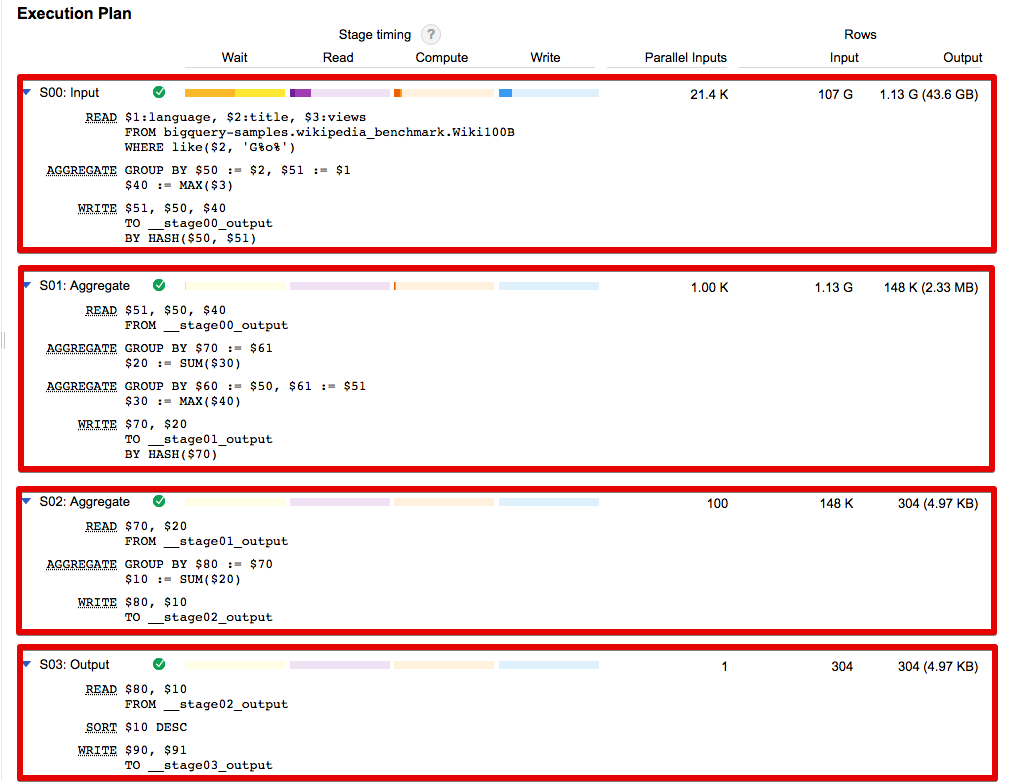

To demonstrate this concept, let’s take a look at a BigQuery query presented by Jordan Tigani in his Google Cloud Next ‘17 session:

bigquery-samples.wikipedia_benchmark.Wiki100B is the public dataset. BigQuery Supports both standard SQL 2011 and Legacy SQL.

`bigquery-samples.wikipedia_benchmark.Wiki100B` for Standard SQL

[bigquery-samples:wikipedia_benchmark.Wiki100B] for Legacy SQL

This query reveals a shuffle after both Stage 1 and Stage 2. However, rather than moving data from Stage 1 nodes to Stage 2 nodes, the query uses a separate, in-memory subservice to keep the shuffled data. This is a much cleaner architecture — BigQuery avoids the resource sloshing and bottlenecking associated with classical shuffle. It can also quickly recover from unexpected out-of-memory conditions associated with data skew in shuffle.

You can view the execution plan and timeline area chart of the query by hitting the Next button. This helps us to analyze and optimize our query at different stages, if needed.

BigQuery Data Formats:

-

Load:

CSV, JSON (Newline Delimited), Avro (Best for compressed files), Parquet, Datastore Backups (Google object storage database)

-

Read:

CSV, JSON, Avro, Parquet

-

Export:

CSV, JSON, Avro, Google Cloud Storage (gs://Bucket_name)

BigQuery Project Hierarchy and Roles:

A BigQuery Project can have multiple owners, and contains users and datasets. A dataset further contains multiple tables and views. Think of views as more restricted tables containing select columns of the original table to provide a more controlled access to different stakeholders. Access control can only be provided at project, dataset and view levels.

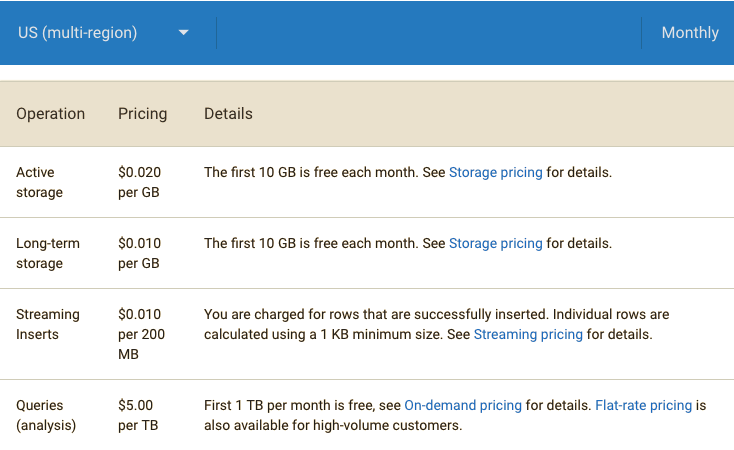

BigQuery Pricing:

BigQuery storage costs are based solely on the amount of data you store. Storage charges can be:

-

Active — A monthly charge for data stored in tables you have modified in the last 90 days.

-

Long-term — A lower monthly charge for data stored in tables that have not been modified in the last 90 days.

Query costs are based on the amount of data processed by the query. Query charges can be:

-

On-demand — The most flexible option. On-demand query pricing is based solely on usage.

-

Flat-rate — Enterprise customers generally prefer flat-rate pricing for queries because it offers predictable, fixed month-to-month costs.

Google cloud platform also offers the pricing calculator by which you can estimate the cost as per your use case.

Conclusion:

BigQuery is a fully optimized, no-ops solution for many use cases. It has the capacity to handle streaming inserts as well as some other interesting features like nested fields, structs, and partitioning tables (based on ingestion time and timestamp)

Here at Markelytics, we recently tried out BigQuery for firebase data (for a survey app) and email data for Marketo (for an auction company).

Firebase stores user data, event data and campaign data in nested records in partition tables. The UNNEST function of standard SQL proved to be very useful in getting the data ready for visualization in data studio by flattening and reshaping it which further helped build user funnels, visualize event performance and filter out performance based on specific user activity.

Marketo is a marketing automation platform which stores email related data (email opens, email sends, email clicks etc). The purpose was to perform RFM (Recency, Frequency and Monetary value) analysis. BigQuery acted as a sink for Stitch, which is a connector for Marketo and used to pull data out of the marketo platform. The serverless nature of BigQuery allowed me to process 10s of millions of rows and aggregate data in order of seconds.

As Google analytics partners we are used to getting most of our answers in warp speed without worrying about the infrastructure that powers it and we are excited to see how we can scale this approach to do this on more of client data and provide answers to broader business questions.

This is a new series of articles that takes a look at the upcoming convergence of the digital analytics world with statistical learning. Read what Umair, our statistical learning lead has to say, right here.